Our proposed planner-agnostic online-mapping evaluation metric captures how well online mapping systems correctly cut down the search volume.

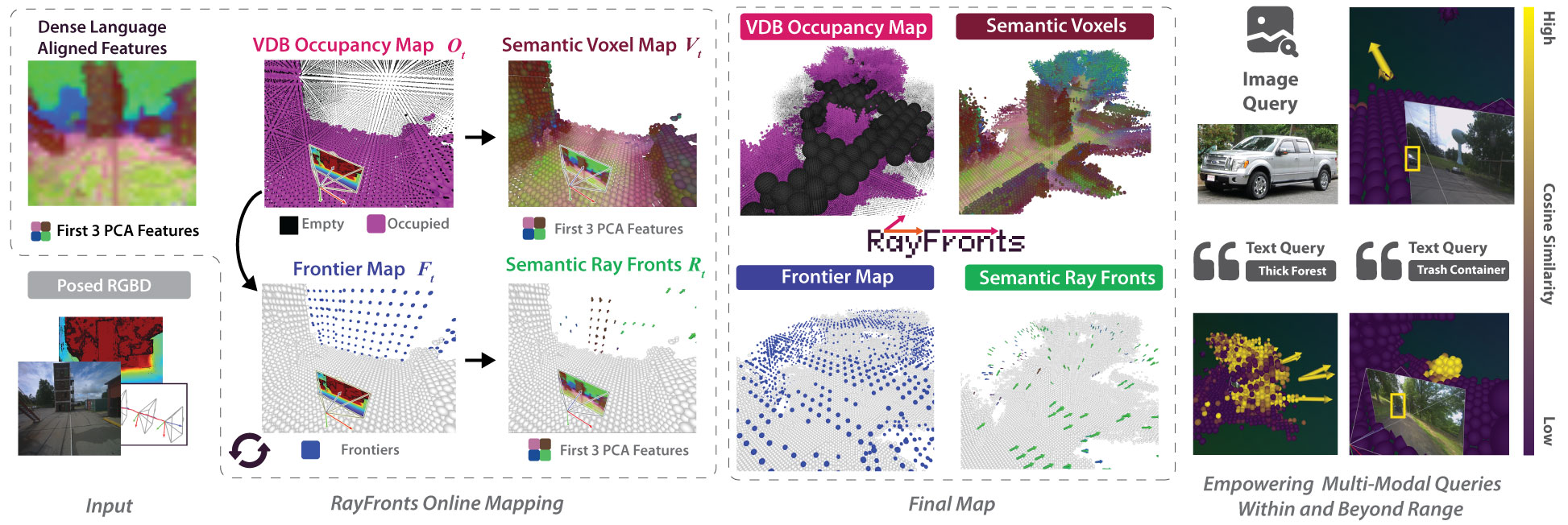

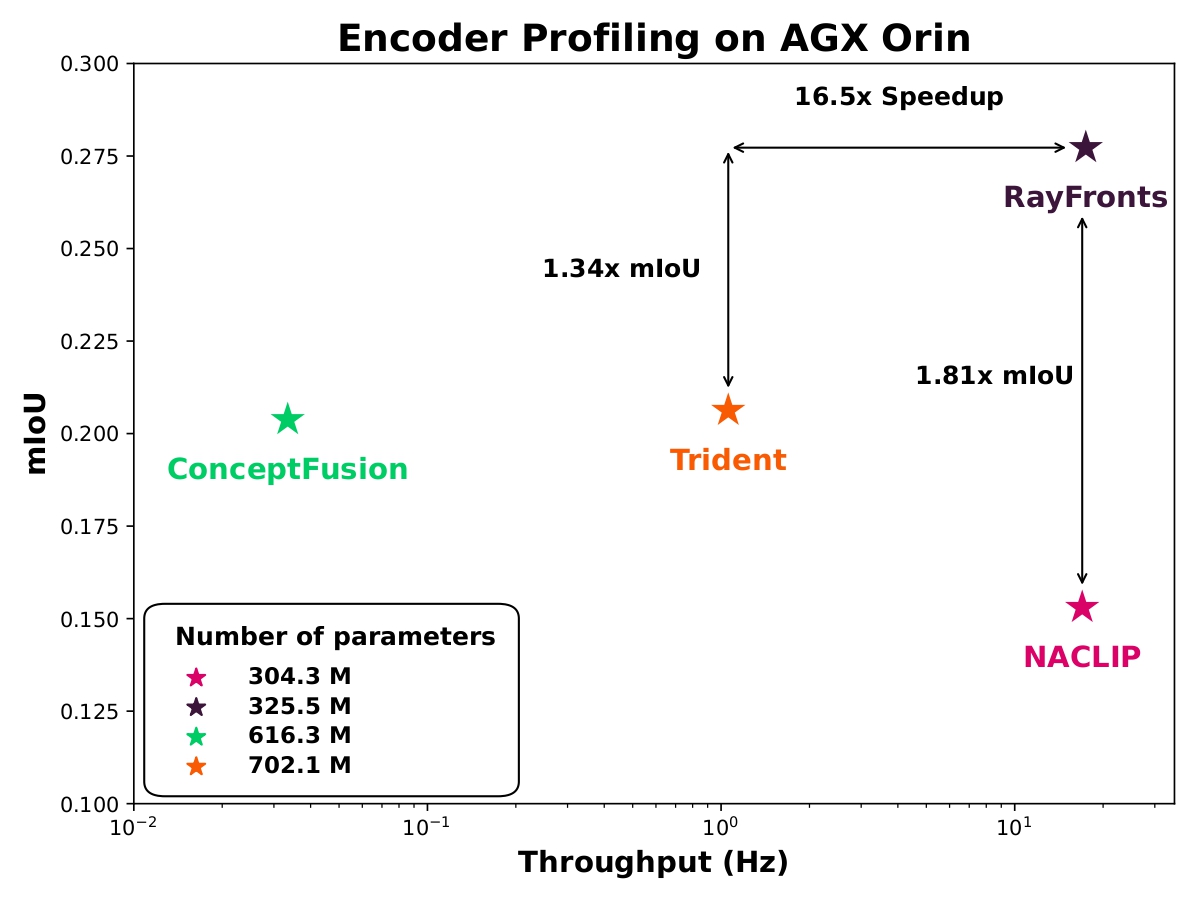
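To make the idea of "correctly cutting down search volume" concrete, here is a toy sketch. It is not the paper's metric. It assumes a hypothetical setup where a mapping system assigns a relevance score to every candidate cell of unexplored space, a searcher visits cells in descending score order, and we measure the fraction of the total volume visited up to and including the first cell that contains the target. Lower is better. The function name `fraction_searched` is illustrative only.

```python
import numpy as np

def fraction_searched(scores: np.ndarray, target_mask: np.ndarray) -> float:
    """Toy, hypothetical search-volume measure.

    scores: per-cell relevance scores produced by a mapping system (any shape).
    target_mask: boolean array of the same shape, True where the target lies.
    Returns the fraction of cells visited, in descending score order, up to and
    including the first cell that contains the target.
    """
    flat_scores = np.asarray(scores, dtype=float).ravel()
    flat_target = np.asarray(target_mask, dtype=bool).ravel()
    if not flat_target.any():
        raise ValueError("target_mask must mark at least one cell")
    # Visit cells from most to least relevant; a stable sort keeps ties in index order.
    order = np.argsort(-flat_scores, kind="stable")
    # 0-based position of the first target cell in the visit order.
    first_hit = int(np.argmax(flat_target[order]))
    return (first_hit + 1) / flat_scores.size
```

For example, with scores `[0.1, 0.9, 0.3, 0.7]` and the target in the last cell, the searcher visits the second and then the fourth cell, so the function returns `0.5`. A constant (uninformative) score falls back to a fixed index-order scan.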

Open-set semantic mapping is crucial for open-world robots. Current mapping approaches are either limited by depth range or only map beyond-range entities in constrained settings; overall, they fail to combine within-range and beyond-range observations. Furthermore, these methods trade off fine-grained semantics against efficiency. We introduce RayFronts, a unified representation that enables both dense and efficient beyond-range semantic mapping. RayFronts encodes task-agnostic open-set semantics into both in-range voxels and beyond-range rays stored at map boundaries, empowering the robot to reduce search volumes significantly and make informed decisions both within and beyond sensory range, while running at 8.84 Hz on an AGX Orin. Benchmarking the within-range semantics shows that RayFronts's fine-grained image encoding provides 1.34x higher zero-shot 3D semantic segmentation mIoU than Trident while improving throughput by 16.5x. Traditionally, online mapping performance is entangled with other system components, which complicates evaluation. We propose a planner-agnostic evaluation framework that captures the utility for online beyond-range search and exploration, and show that RayFronts reduces search volume 2.2x more efficiently than the closest online baselines.
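RayFronts attaches beyond-range rays to map boundaries, i.e. frontiers. This page does not spell out how frontiers are extracted, so the sketch below uses the classic definition of a frontier as a free cell adjacent to unknown space, on a dense NumPy occupancy grid rather than a sparse VDB map. The cell codes `UNKNOWN`, `FREE`, `OCCUPIED` and the function `find_frontiers` are hypothetical.

```python
import numpy as np

# Hypothetical cell codes for a dense occupancy grid.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1

def find_frontiers(grid: np.ndarray) -> np.ndarray:
    """Return an (N, 3) array of indices of FREE cells that have at least one
    UNKNOWN face neighbor (6-connectivity) in a 3D occupancy grid."""
    free = grid == FREE
    unknown = grid == UNKNOWN
    # Pad with False so cells on the array border are not treated as touching
    # unknown space just because they sit at the edge of the array.
    padded = np.pad(unknown, 1, mode="constant", constant_values=False)
    touches_unknown = np.zeros_like(free)
    for axis in range(3):
        for shift in (-1, 1):
            # Shift the padded mask by one cell along this axis, then crop back
            # to the original shape: each cell now sees one face neighbor.
            shifted = np.roll(padded, shift, axis=axis)
            touches_unknown |= shifted[1:-1, 1:-1, 1:-1]
    return np.argwhere(free & touches_unknown)
```

Each returned cell is a candidate location where beyond-range semantic rays could be stored. A dense grid keeps the example short; sparse structures such as VDB avoid allocating memory for empty space, which matters for large outdoor maps.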

Overview of our online mapping system. RayFronts is designed for multi-objective & multi-modal open-set querying of both in-range and beyond-range semantic entities. Given posed RGB-D images, we first extract dense features with our fast language-aligned image encoder. The posed depth and features are then used to construct a semantic voxel map for in-range queries. In parallel, RayFronts maintains a VDB-based occupancy map to generate frontiers, which are further associated with multi-directional semantic rays. These semantic ray frontiers enable beyond-range querying of open-set concepts in unmapped regions.
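The sketch below illustrates the two halves of the representation described in the overview: a semantic voxel map for in-range observations and semantic rays attached to frontier cells for beyond-range ones, both queried by cosine similarity against a text embedding. It is a hypothetical simplification, not the RayFronts implementation. Plain NumPy vectors stand in for the language-aligned encoder's features, each voxel or (frontier, direction bin) pair keeps a running mean, and ray directions are discretized into azimuth/elevation bins. `ToySemanticMap` and its methods are illustrative names.

```python
import numpy as np

class ToySemanticMap:
    """Hypothetical sketch: in-range semantic voxels plus beyond-range semantic
    rays attached to frontier cells, each storing a running-mean feature."""

    def __init__(self, voxel_size: float = 0.1, n_az: int = 8, n_el: int = 4):
        self.voxel_size = voxel_size
        self.n_az, self.n_el = n_az, n_el
        self.voxels = {}  # voxel index -> (feature sum, count)
        self.rays = {}    # (frontier voxel index, direction bin) -> (feature sum, count)

    def _voxel(self, point):
        idx = np.floor(np.asarray(point, dtype=float) / self.voxel_size)
        return tuple(int(v) for v in idx)

    def _dir_bin(self, direction):
        d = np.asarray(direction, dtype=float)
        d = d / np.linalg.norm(d)              # unit direction (copy, not in place)
        az = np.arctan2(d[1], d[0])            # azimuth in [-pi, pi]
        el = np.arcsin(np.clip(d[2], -1, 1))   # elevation in [-pi/2, pi/2]
        a = min(int((az + np.pi) / (2 * np.pi) * self.n_az), self.n_az - 1)
        e = min(int((el + np.pi / 2) / np.pi * self.n_el), self.n_el - 1)
        return (a, e)

    @staticmethod
    def _accumulate(table, key, feat):
        s, n = table.get(key, (0.0, 0))
        table[key] = (s + feat, n + 1)

    def add_in_range(self, point, feat):
        """Fuse a feature observed at a 3D point within depth range."""
        self._accumulate(self.voxels, self._voxel(point), np.asarray(feat, dtype=float))

    def add_beyond_range(self, frontier_point, direction, feat):
        """Fuse a feature seen along a ray that leaves the map at a frontier."""
        key = (self._voxel(frontier_point), self._dir_bin(direction))
        self._accumulate(self.rays, key, np.asarray(feat, dtype=float))

    @staticmethod
    def _best(table, query):
        q = query / np.linalg.norm(query)
        best_key, best_sim = None, -np.inf
        for key, (s, n) in table.items():
            f = s / n  # running mean feature
            sim = float(f @ q / np.linalg.norm(f))  # cosine similarity
            if sim > best_sim:
                best_key, best_sim = key, sim
        return best_key, best_sim

    def query(self, text_feat):
        """Return the best-matching voxel and (frontier, direction) pair."""
        q = np.asarray(text_feat, dtype=float)
        return {"in_range": self._best(self.voxels, q),
                "beyond_range": self._best(self.rays, q)}
```

Given a text embedding `q`, `m.query(q)` returns both the best in-range voxel and the best (frontier, direction) pair, so a downstream planner could head to the voxel when the concept is already mapped and follow the ray otherwise.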

RayFronts is superior to all baselines across depth ranges, empowering both fine-grained localization and beyond-range guidance.

RayFronts consistently outperforms the baselines in mIoU and achieves SOTA performance, beating the next-best baselines by +18.07% and +9.63% mIoU on Replica and ScanNet, respectively, excluding background. RayFronts also handles background seamlessly with its single-forward-pass approach, while segment-then-encode approaches fall short. For outdoor, in-the-wild performance on TartanGround, RayFronts exceeds the baselines by 3.36% mIoU.
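The segmentation results above are reported in mIoU, excluding background for Replica and ScanNet. As a reference, here is a minimal sketch of mean intersection-over-union over per-point labels. It assumes one common convention for excluding a class: points whose ground-truth label is in `ignore_classes` are dropped before scoring, and those classes are left out of the average. The exact protocol used for these benchmarks may differ, for example in how classes absent from a scene are handled.

```python
import numpy as np

def mean_iou(pred, gt, num_classes, ignore_classes=()):
    """Mean IoU over per-point labels (sketch).

    Points whose ground-truth label is in ignore_classes are dropped, those
    classes are excluded from the average, and classes absent from both the
    remaining prediction and ground truth are skipped.
    """
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    # Drop points whose ground truth is an ignored class (e.g., background).
    keep = ~np.isin(gt, list(ignore_classes))
    pred, gt = pred[keep], gt[keep]
    ious = []
    for c in range(num_classes):
        if c in ignore_classes:
            continue
        inter = np.sum((pred == c) & (gt == c))
        union = np.sum((pred == c) | (gt == c))
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious.append(inter / union)
    return float(np.mean(ious)) if ious else float("nan")
```

For example, `mean_iou([0, 1, 1, 2], [0, 1, 2, 2], num_classes=3)` averages per-class IoUs of 1, 0.5, and 0.5 to give 2/3; passing `ignore_classes=(0,)` drops the class-0 point and yields 0.5.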

RayFronts provides state-of-the-art mIoU & 17.5 Hz throughput on an AGX Orin. It surpasses Trident with 1.34x higher mIoU and a 16.5x speedup, while achieving 1.81x higher mIoU than NACLIP, which operates at a similar throughput. We also evaluate the full mapping throughput and achieve real-time performance at 8.84 Hz on the AGX Orin.
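The reported throughputs translate into per-frame time budgets. Assuming the 16.5x speedup is measured against the same 17.5 Hz encoder throughput on the AGX Orin, the back-of-the-envelope arithmetic (derived from the figures above, not separately reported) is:

$$
f_{\text{Trident}} \approx \frac{17.5\ \text{Hz}}{16.5} \approx 1.06\ \text{Hz},\qquad
t_{\text{encoder}} = \frac{1}{17.5\ \text{Hz}} \approx 57\ \text{ms},\qquad
t_{\text{mapping}} = \frac{1}{8.84\ \text{Hz}} \approx 113\ \text{ms}.
$$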

@article{alama2025rayfronts,
  title={RayFronts: Open-Set Semantic Ray Frontiers for Online Scene Understanding and Exploration},
  author={Alama, Omar and Bhattacharya, Avigyan and He, Haoyang and Kim, Seungchan and Qiu, Yuheng and Wang, Wenshan and Ho, Cherie and Keetha, Nikhil and Scherer, Sebastian},
  journal={arXiv preprint arXiv:2504.06994},
  year={2025}
}